We tested Glaze art cloaking

We seem to have bypassed it, and are sharing our findings with the developers.

In parallel to our opt-out approach, which has so far seen 80M images opted out of AI training in a few months, a team of researchers at the University of Chicago, working with artists such as Karla Ortiz, has taken a different approach to artist protection that they call Glaze.

Glaze is a novel and clever mechanism to scramble an artwork for AI models while maintaining its integrity for the human observer. You can read more about the technique in this New York Times feature, and now download it to experiment with.

We are always questioning our approach to representing artists, and so were excited to test out a different method from our own.

We applaud the motivations and efforts behind the project, but regrettably it appears that it took us less than an hour to bypass Glaze. We have shared our findings with the project developers, and expect that less pro-consent actors will not take long to figure out what we did. Hopefully these findings can benefit the project moving forward.

Results

We decided to test on Salvador Dali, who has a very distinctive style.

We collected eight public domain Dali artworks.

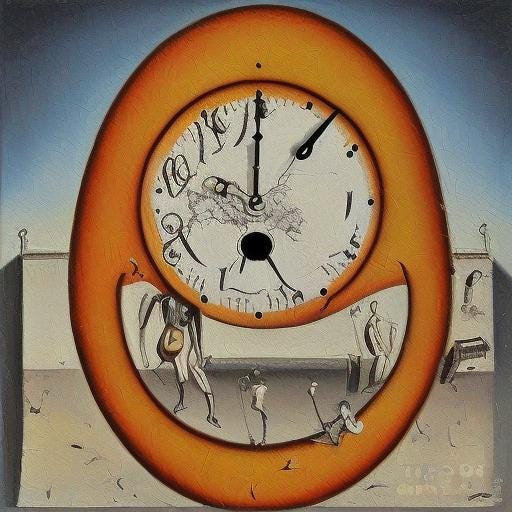

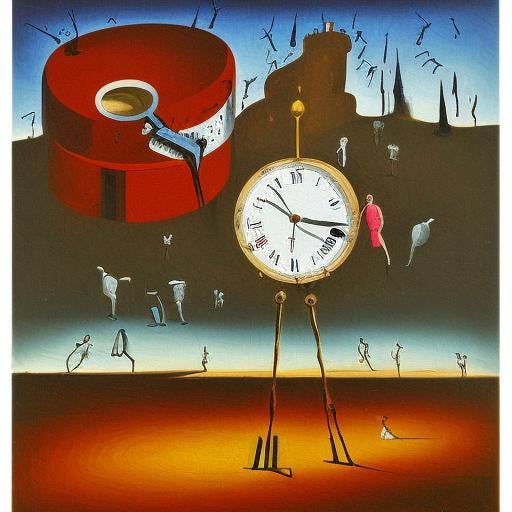

We glazed those artworks using the default settings (left side of the image), then applied a deglazing process to those works (right side of the image). Notice that the deglazing removes the artifacts intended to disrupt AI systems.

We fine-tuned two image models, one on the glazed artworks and one on the deglazed artworks. We used a generic prompt, “ukj style painting of a clock”, with no reference to Dali. The ‘ukj’ in the prompt is a placeholder token standing in for the style learned during fine-tuning, which is the style that Glaze is designed to thwart.

Glazed generations.

This is a subjective matter, but to us even the glazed outputs communicate Dali style, although traces of the matte-like artifacts are visible.

DeGlazed generations.

These generations appear to clearly convey the style of Dali, which suggests that the deglazing method we used did, in fact, remove the benefits of using Glaze.

This is a subjective matter, but we feel the results are convincing.

While we share the ideal of fully consenting, opt-in AI training, until that is a reality there are important debates to be had about which approach most effectively gives artists more control over their work in a dynamic and chaotic AI field.

To some extent one could argue that both our approach and Glaze are opt-outs, of sorts. Our approach has so far been to establish artist consent claims and deliver them to AI organizations training models, and the Glaze approach is a valiant attempt to modulate the art files themselves, with the aspiration of making them untrainable.

Comparisons in approach

To compare the two approaches, we weighed them on a few grounds:

Effectiveness: This article proposes that Glaze can be bypassed in its current form. If one argues that glazing is a form of opt-out request, its effectiveness is perhaps equivalent to that of making an opt-out claim.

Time commitment: It takes 20 minutes to Glaze an image on a beefy MacBook with an M2 processor, and much longer on less powerful devices. Opt-outs are less time intensive.

Repeat commitment: Opting out is a one-time commitment per image link removed. With Glaze, every new deglazing method that appears will force the researchers to adapt to it, and artists will have to repeat the process.

Carbon footprint: Using our 80M image number and the 20 minutes per image above, it would take roughly 26.7M hours of compute to apply Glaze. That’s a lot of carbon to expend, and it would have to be spent again for each new version.

Collective action 1: We feel collective action is essential to the success of opt-out campaigns. With bulk opt-outs, we were able to remove tens of millions of images on behalf of large partners within minutes of verifying their organizations. Decentralizing by having artists opt out through disparately altering images may lose some of these benefits.

Collective action 2: To apply Glaze to that many images, our partners would have to iterate through their catalogs, applying Glaze one by one and incurring, at a minimum, the compute costs above. If they did it all on one machine, it would take more than 3,000 years to complete.
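For readers who want to check the compute estimates above, here is a minimal back-of-the-envelope sketch. It assumes a flat 20 minutes per image, images processed one after another on a single machine, and a 365-day year. The function and constant names are ours, chosen for illustration only; they are not part of Glaze or any other tool.

```python
# Back-of-the-envelope check of the compute estimates in this article.
# Assumptions (ours): a constant 20 minutes per image, strictly sequential
# processing on one machine, and a 365-day year.

IMAGES_OPTED_OUT = 80_000_000   # the 80M image figure quoted in the article
MINUTES_PER_IMAGE = 20          # quoted time to Glaze one image on an M2 MacBook
HOURS_PER_YEAR = 24 * 365


def glaze_compute_hours(images: int, minutes_per_image: float) -> float:
    """Total compute hours to process every image once."""
    return images * minutes_per_image / 60


def single_machine_years(hours: float) -> float:
    """Wall-clock years if all of that compute ran on one machine."""
    return hours / HOURS_PER_YEAR


hours = glaze_compute_hours(IMAGES_OPTED_OUT, MINUTES_PER_IMAGE)
years = single_machine_years(hours)

print(f"{hours / 1e6:.1f}M hours, about {years:,.0f} years on one machine")
# prints: 26.7M hours, about 3,044 years on one machine
```

Changing `MINUTES_PER_IMAGE` shows how sensitive the totals are to hardware: slower devices scale both numbers up proportionally, and each new Glaze version would incur the full cost again.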

We have shared our findings with the Glaze team, and share their commitment to offering artists tools to assert agency over their work in the AI era. We hope our tests prove useful and will assist if and where we can.

xSpawning